ParaMgmt: Interacting with thousands of servers over SSH (part 1)

While working at Google in the Platforms Networking research group, I was tasked with running network performance benchmarks on large clusters of servers. Google has its own internal application scheduling system, but for unmentionable reasons, I couldn’t use it for my tests. I needed 100% control of the servers, so I resorted to SSH and SCP.

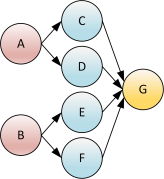

A common benchmark assigns some servers as senders and others as receivers. A typical test sequence would go something like this:

- Build the benchmark binaries on my local workstation.

- Copy the sender binary and receiver binary to the sender and receiver servers, respectively.

- Copy the configuration files to the servers.

- Run the test.

- Copy the results files from the servers back to my workstation.

- Parse and analyze the results.
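To make the tedium concrete, here is a rough sketch of the copy, run, and collect steps above written out as plain scp and ssh command lines. It only builds the argument lists and does not run anything. The function name, the `build/` directory, the `.cfg` and `results.txt` file names, and the `/tmp/bench` working directory are all hypothetical placeholders, not details of my actual benchmarks.

```python
def plan_benchmark(senders, receivers, workdir="/tmp/bench"):
    """Return the scp/ssh argument lists for one test run, in order."""
    roles = [("sender", senders), ("receiver", receivers)]
    targets = [(role, host) for role, hosts in roles for host in hosts]
    plan = []
    # Copy the role-specific binary and configuration file to each server.
    for role, host in targets:
        plan.append(["scp", f"build/{role}", f"{role}.cfg", f"{host}:{workdir}/"])
    # Run the test on each server.
    for role, host in targets:
        plan.append(["ssh", host, f"cd {workdir} && ./{role} {role}.cfg > results.txt"])
    # Copy each results file back to the workstation.
    for role, host in targets:
        plan.append(["scp", f"{host}:{workdir}/results.txt", f"results/{host}.txt"])
    return plan


if __name__ == "__main__":
    for argv in plan_benchmark(["10.0.0.100"], ["10.0.0.101"]):
        print(" ".join(argv))
```

Even with one sender and one receiver this is six separate commands, and the count grows linearly with the number of servers.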

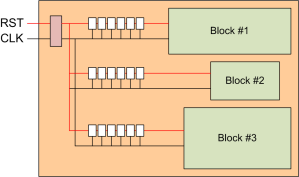

This process became VERY tedious. As a result, I wrote a software package to do this more efficiently. It is called ParaMgmt, and it is now open source on GitHub (https://github.com/google/paramgmt). ParaMgmt is a Python package designed to ease the burden of interacting with many remote machines via SSH. The primary focus is on parallelism, good error handling, automatic connection retries, and readable output. ParaMgmt can run local commands, run remote commands, transfer files to and from remote machines, and execute local scripts on remote machines. The package also includes command-line executables that wrap the functionality provided by the Python package.

The GitHub page describes how to install the software. The easiest method is to use pip and the GitHub link:

nic@myworkstation$ pip3 install --user \

> git+https://github.com/google/paramgmt.git

All you need to use the software is a list of remote hosts you want to interact with. I’ll be focusing on the command-line executables in this post, so let’s start by making a file containing our hosts:

nic@myworkstation$ cat << EOF > hosts.txt

> 10.0.0.100

> 10.0.0.101

> 10.0.0.102

> EOF
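If you want to consume the same hosts file from your own Python code, a minimal sketch might look like the following. The function name is hypothetical, and skipping blank lines and dropping duplicates are my assumptions for this sketch, not a description of how ParaMgmt itself parses the file.

```python
def parse_hosts(lines):
    """Return the hosts found in an iterable of lines, one host per line."""
    hosts = []
    for line in lines:
        host = line.strip()
        # Assumption: ignore blank lines and keep only the first copy of a host.
        if host and host not in hosts:
            hosts.append(host)
    return hosts


if __name__ == "__main__":
    with open("hosts.txt") as hosts_file:
        print(parse_hosts(hosts_file))
```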

Now that we have our hosts file, let’s run some remote commands. There are six command-line executables:

- rhosts = Remote hosts – just prints each remote host.

- lcmd = Local command – runs commands locally for each remote host.

- rcmd = Remote command – runs commands remotely on each remote host.

- rpush = Remote push – pushes files to each remote host.

- rpull = Remote pull – pulls files from each remote host.

- rscript = Remote script – runs local scripts on each remote host.

First, make sure you’ve set up key-based authentication with all servers (tutorial). Now let’s use the ‘rhosts’ executable to verify our hosts file, and also try adding more hosts on the command line.

nic@myworkstation$ rhosts -f hosts.txt

10.0.0.100

10.0.0.101

10.0.0.102

nic@myworkstation$ rhosts -f hosts.txt -m abc.com 123.com

abc.com

123.com

10.0.0.100

10.0.0.101

10.0.0.102

Let’s verify that SSH works using the ‘rcmd’ executable:

nic@myworkstation$ rcmd -f hosts.txt -- whoami

rcmd [10.0.0.100]: whoami

stdout:

nic

rcmd [10.0.0.101]: whoami

stdout:

nic

rcmd [10.0.0.102]: whoami

stdout:

nic

3 succeeded, 0 failed, 3 total

You can see that we remotely logged in and successfully executed the ‘whoami’ command on each host. All 3 connections ran in parallel. ParaMgmt colors its output to make it easier to scan. In our example, the execution was successful, so the output is green. If a command writes text to stderr, ParaMgmt colors the output yellow if the command still exited successfully and red if it exited with an error status. Upon an error, ParaMgmt also reports how many attempts were made, the return code, and the hosts that failed.

nic@myworkstation$ rcmd -f hosts.txt -- 'echo some text 1>&2'

rcmd [10.0.0.100]: echo some text 1>&2

stderr:

some text

rcmd [10.0.0.101]: echo some text 1>&2

stderr:

some text

rcmd [10.0.0.102]: echo some text 1>&2

stderr:

some text

3 succeeded, 0 failed, 3 total

nic@myworkstation$ rcmd -f hosts.txt -- \

> 'echo some text 1>&2; false'

rcmd [10.0.0.100]: echo some text 1>&2; false

stderr:

some text

return code: 1

attempts: 1

rcmd [10.0.0.101]: echo some text 1>&2; false

stderr:

some text

return code: 1

attempts: 1

rcmd [10.0.0.102]: echo some text 1>&2; false

stderr:

some text

return code: 1

attempts: 1

0 succeeded, 3 failed, 3 total

Failed hosts:

10.0.0.100

10.0.0.101

10.0.0.102
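The coloring rule described above boils down to a small decision on the return code and stderr. Here is a sketch of that rule as I have described it; the function name is hypothetical, and treating a failing command with empty stderr as red is an assumption on my part.

```python
def output_color(return_code, stderr):
    """Pick a display color from a command's return code and stderr text."""
    if return_code != 0:
        # The command exited with an error status.
        return "red"
    if stderr:
        # The command succeeded but still wrote something to stderr.
        return "yellow"
    return "green"


if __name__ == "__main__":
    print(output_color(0, ""))            # the whoami example
    print(output_color(0, "some text"))   # stderr output, successful exit
    print(output_color(1, "some text"))   # stderr output, failed exit
```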

ParaMgmt has a great feature that makes it extremely useful, namely automatic retries. Commands in ParaMgmt will automatically retry when an SSH connection fails. This hardly ever occurs when you are communicating with only 3 servers, but when you use ParaMgmt to connect to thousands of servers potentially scattered across the planet, all hell breaks loose. The automatic retry feature of ParaMgmt hides all the annoying network issues. It defaults to a maximum of 3 attempts, but this is configurable on the command line with the “-a” option.
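To illustrate the idea (not ParaMgmt's actual implementation), here is a minimal sketch of parallel SSH with retries using only the Python standard library. It relies on the fact that the `ssh` client exits with status 255 when ssh itself fails, and retries only in that case. The function names, the cap of 64 worker threads, and the choice to treat 255 as the only retryable status are assumptions for this sketch. Note that a remote command that itself exits with 255 would be indistinguishable from a connection failure here.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor

SSH_ERROR_STATUS = 255  # exit status the ssh client uses for its own errors


def run_with_retries(argv, max_attempts=3, run=subprocess.run):
    """Run argv, retrying while it exits with the ssh error status.

    Returns (result, attempts). `run` is injectable so the logic can be
    exercised without a network.
    """
    for attempt in range(1, max_attempts + 1):
        result = run(argv, capture_output=True, text=True)
        if result.returncode != SSH_ERROR_STATUS:
            break
    return result, attempt


def run_on_hosts(hosts, command, max_attempts=3):
    """Run one remote command on every host in parallel.

    Returns a dict mapping each host to its (result, attempts) pair.
    """
    def task(host):
        return run_with_retries(["ssh", host, command], max_attempts)

    workers = min(64, max(1, len(hosts)))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(zip(hosts, pool.map(task, hosts)))
```

With this sketch, `run_on_hosts(["10.0.0.100", "10.0.0.101"], "whoami")` would open both connections concurrently and give each host up to 3 attempts.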

Now that we can run remote commands, let’s try copying files to and from the remote machines:

nic@myworkstation$ rpush -f hosts.txt -d /tmp -- f1.txt

rpush [10.0.0.100]: f1.txt => 10.0.0.100:/tmp

rpush [10.0.0.101]: f1.txt => 10.0.0.101:/tmp

rpush [10.0.0.102]: f1.txt => 10.0.0.102:/tmp

3 succeeded, 0 failed, 3 total

nic@myworkstation$ rpull -f hosts.txt -d /tmp -- \

> /tmp/f2.txt /tmp/f3.txt

rpull [10.0.0.100]: 10.0.0.100:{/tmp/f2.txt,/tmp/f3.txt} => /tmp

rpull [10.0.0.101]: 10.0.0.101:{/tmp/f2.txt,/tmp/f3.txt} => /tmp

rpull [10.0.0.102]: 10.0.0.102:{/tmp/f2.txt,/tmp/f3.txt} => /tmp

3 succeeded, 0 failed, 3 total

As shown in these examples, ParaMgmt can push and pull many files simultaneously. ParaMgmt can also run a local script on a remote machine. You could do this with an rpush followed by an rcmd, but it is faster and cleaner to use ‘rscript’, as follows:

nic@myworkstation$ cat << 'EOF' > s1.sh

> #!/bin/bash

> echo -n "hello "

> echo -n `whoami`

> echo ", how are you?"

> EOF

nic@myworkstation$ rscript -f hosts.txt -- s1.sh

rscript [10.0.0.100]: running s1.sh

stdout:

Welcome to Ubuntu 14.10 (GNU/Linux 3.16.0-39-generic x86_64)

hello nic, how are you?

rscript [10.0.0.101]: running s1.sh

stdout:

Welcome to Ubuntu 14.10 (GNU/Linux 3.16.0-39-generic x86_64)

hello nic, how are you?

rscript [10.0.0.102]: running s1.sh

stdout:

Welcome to Ubuntu 14.10 (GNU/Linux 3.16.0-39-generic x86_64)

hello nic, how are you?

3 succeeded, 0 failed, 3 total

There is one more really cool feature of ParaMgmt I should cover. Often, the remote hostname needs to appear in a command. For instance, after a benchmark has run on all servers and you want to collect the data using the ‘rpull’ command, it would be nice to have a corresponding local directory for each remote host. For this, we can use the ‘lcmd’ executable with ParaMgmt’s hostname replacement feature. Any instance of “?HOST” in the command is replaced with the corresponding hostname. This works with all executables and is even applied to text within scripts run by the ‘rscript’ executable.

nic@myworkstation$ lcmd -f hosts.txt -- mkdir /tmp/res?HOST

lcmd [10.0.0.100]: mkdir /tmp/res10.0.0.100

lcmd [10.0.0.101]: mkdir /tmp/res10.0.0.101

lcmd [10.0.0.102]: mkdir /tmp/res10.0.0.102

3 succeeded, 0 failed, 3 total

nic@myworkstation$ rpull -f hosts.txt -d /tmp/res?HOST -- res.txt

rpull [10.0.0.100]: 10.0.0.100:res.txt => /tmp/res10.0.0.100

rpull [10.0.0.101]: 10.0.0.101:res.txt => /tmp/res10.0.0.101

rpull [10.0.0.102]: 10.0.0.102:res.txt => /tmp/res10.0.0.102

3 succeeded, 0 failed, 3 total
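Conceptually, the hostname replacement is a plain text substitution performed once per host before the command runs. The sketch below shows that idea with a hypothetical helper; it is an illustration of the behavior seen in the output above, not ParaMgmt's own code.

```python
def expand_host(text, host):
    """Replace every "?HOST" token in text with the given hostname."""
    return text.replace("?HOST", host)


if __name__ == "__main__":
    for host in ["10.0.0.100", "10.0.0.101", "10.0.0.102"]:
        print(expand_host("mkdir /tmp/res?HOST", host))
```

The same substitution applies equally well to the full text of a script, which is why it also works for scripts.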

Here is an example of using the hostname auto-replacement in a script. I’ve just added the “?HOST” to the previous script example:

nic@myworkstation$ cat << 'EOF' > s1.sh

> #!/bin/bash

> echo -n "hello "

> echo -n `whoami`

> echo "@?HOST, how are you?"

> EOF

nic@myworkstation$ rscript -f hosts.txt -- s1.sh

rscript [10.0.0.100]: running s1.sh

stdout:

Welcome to Ubuntu 14.10 (GNU/Linux 3.16.0-39-generic x86_64)

hello nic@10.0.0.100, how are you?

rscript [10.0.0.101]: running s1.sh

stdout:

Welcome to Ubuntu 14.10 (GNU/Linux 3.16.0-39-generic x86_64)

hello nic@10.0.0.101, how are you?

rscript [10.0.0.102]: running s1.sh

stdout:

Welcome to Ubuntu 14.10 (GNU/Linux 3.16.0-39-generic x86_64)

hello nic@10.0.0.102, how are you?

3 succeeded, 0 failed, 3 total

ParaMgmt is fast and efficient. It handles all SSH connections in parallel, freeing you from wasting time on less-capable scripts. ParaMgmt’s command-line executables are useful in all sorts of scripting environments. To get the most out of ParaMgmt, import the Python package into your own Python program and unleash concurrent SSH connections to remote machines.